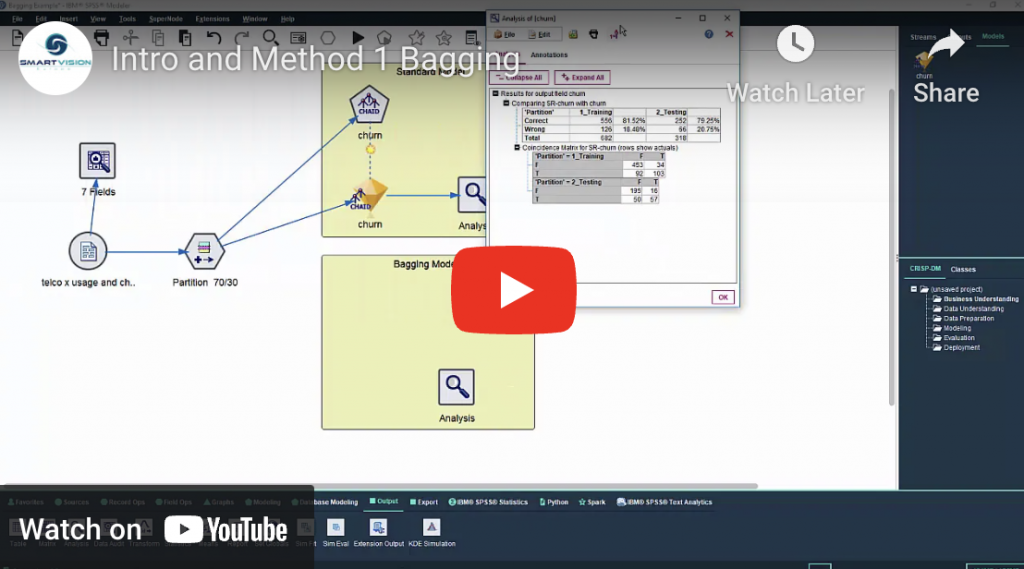

6 secrets of building better models part one: bootstrap aggregation

Bootstrap aggregation, also called bagging, is a randomized ensemble method designed to increase the stability and accuracy of models. It creates a series of models from the same training data set by randomly sampling the data with replacement, fitting one model to each resample, and then combining the models' predictions.
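To make the idea concrete, here is a minimal sketch in plain Python. It is an illustration only: the base model (a least-squares line), the toy data, the number of models, and every function name (`bootstrap_sample`, `fit_line`, `bagged_fit`, `bagged_predict`) are assumptions chosen for this example. Any other base learner could be swapped in.

```python
import random
from statistics import mean


def bootstrap_sample(rows, rng):
    """Draw len(rows) items from rows uniformly at random, with replacement."""
    return [rng.choice(rows) for _ in rows]


def fit_line(rows):
    """Least-squares fit of y = a + b*x on (x, y) pairs (illustrative base model)."""
    xs = [x for x, _ in rows]
    ys = [y for _, y in rows]
    x_bar, y_bar = mean(xs), mean(ys)
    sxx = sum((x - x_bar) ** 2 for x in xs)
    if sxx == 0:
        # Degenerate resample (every x identical): fall back to a constant model.
        return y_bar, 0.0
    b = sum((x - x_bar) * (y - y_bar) for x, y in rows) / sxx
    return y_bar - b * x_bar, b


def bagged_fit(rows, n_models=50, seed=0):
    """Fit one base model per bootstrap resample of the same training set."""
    rng = random.Random(seed)
    return [fit_line(bootstrap_sample(rows, rng)) for _ in range(n_models)]


def bagged_predict(models, x):
    """Aggregate by averaging the individual models' predictions."""
    return mean(a + b * x for a, b in models)


# Toy training data (assumed for illustration): y = 2x + 1 plus Gaussian noise.
data_rng = random.Random(42)
train = [(float(x), 2.0 * x + 1.0 + data_rng.gauss(0, 1)) for x in range(20)]

models = bagged_fit(train)
prediction = bagged_predict(models, 10.0)
print(f"bagged prediction at x=10: {prediction:.2f}")
```

Averaging suits regression. For classification, the usual way to aggregate is a majority vote across the models.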