None of us can have failed to notice the recent debacle over the use of an algorithm by Ofqual (the Office of Qualifications and Examinations Regulation) to predict pupil grades. Once again, ‘algorithm fever’ has generated a flurry of news articles questioning whether we are sleepwalking into a dystopian future where human expert decision-making is replaced with high-volume, automated recommendations driven by opaque and, frankly, sinister computing power.

To be clear, the row over the use of Ofqual’s algorithm, the subsequent U-turns and the inevitable blame-gaming were, by any standards, a fiasco. But I’m not sure any of it shed much light on what ‘algorithms’ actually are and why we should be worried or optimistic about their role in our future.

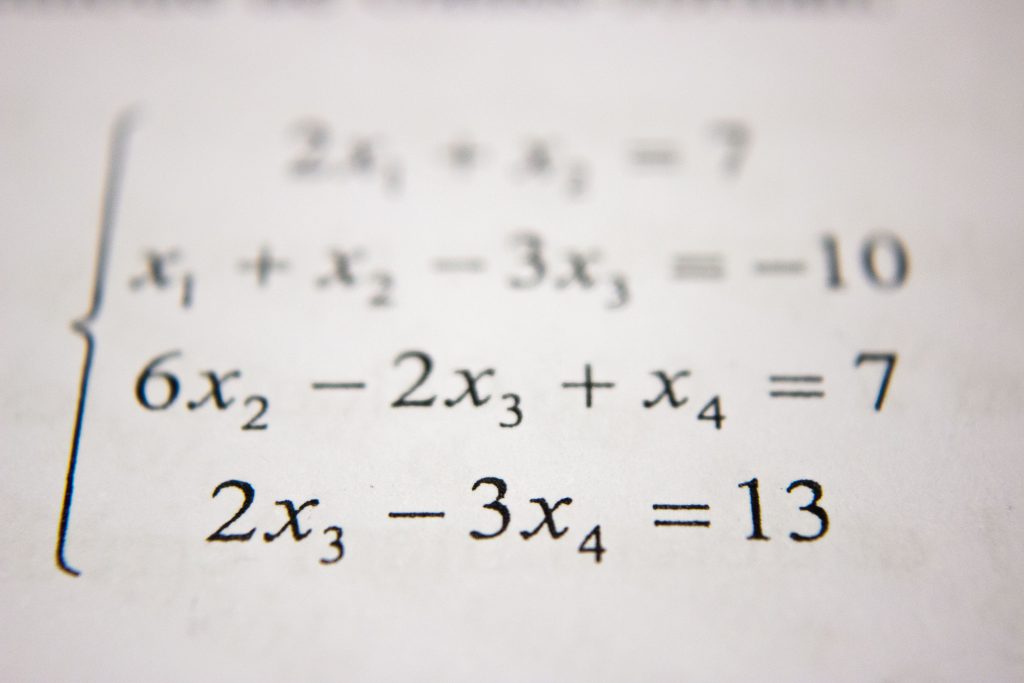

One of the less helpful aspects of news articles concerning algorithms is that journalists are employing a fairly generic term to refer to quite specific applications. An algorithm is a set of instructions or formulae used to solve a problem, perform a task or make a recommendation. Technically, this means algorithms can refer to everything from cake recipes to washing machine programs, facial recognition systems and car insurance calculations.

In the popular press, when journalists use the term algorithm, they are sometimes referring to general AI systems such as those employed by self-driving cars, which of course provide ample material for discussions concerning the ethical, legal and societal ramifications of algorithmic decision-making.

More often, though, what they are really talking about are predictive models. In this context, predictive models include those ‘algorithms’ that are used to automatically identify car number plates, estimate an individual’s credit risk or detect skin cancer. But for any student of data analysis, the most important thing to understand about a predictive model is that it is just a model, i.e. a simplification or representation of something. In this case, that ‘something’ is the sample of data that was used to develop the model. And therein, dear reader, lies a can of worms.

Because the data we collect is a mirror, and an often distorted one at that. Data is a reflection of the concerns and assumptions of the people and organisations that collect it. This means that the data we decide to take notice of can be just as important (or unimportant) as the information we assumed was irrelevant or too difficult to obtain. For this reason, predictive models are just as prone to absorbing the biases and prejudices of those who developed them as anything else that is designed and created by specialists for mass consumption. Like cars, or buildings, or pharmaceuticals or school syllabuses.

But before we declare war on algorithms, perhaps we should consider what the non-algorithmic world looks like. We know this world quite well. It’s called history. That’s the world where decisions were made entirely by humans. In fact, in many human societies, critical decisions that affected the lives of millions were made by people of uncanny similarity, i.e. the same sex, age group, ethnicity, educational background, parentage, physical ability, accent and sexuality.

These same people are almost preternaturally ill-disposed to any data that inconveniently questions their objectivity in decision-making or the degree to which their achievements are based on personal merit. Being human, they are excellent at ignoring data that does not confirm their professional or personal world view.

But even though this same lack of diversity might often translate into a biased data collection strategy, one of the things that predictive modelling algorithms are really good at is ignoring factors that simply don’t affect an outcome. Even when these factors are, consciously or unconsciously, assumed to be important. Such as gender, ethnicity or age.
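For the curious, here is a minimal sketch of what ‘ignoring a factor’ can look like in practice. Everything in it is an assumption made for illustration: the data is synthetic, the salary formula is invented, and the names (`fit_least_squares`, `group`, `per_year`) are hypothetical. It fits an ordinary least-squares model, one of the simplest predictive models, to made-up salaries that depend on years of experience but not on a 0/1 group label standing in for something like gender.

```python
import random


def solve_linear_system(a, b):
    """Solve a x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    # Augmented matrix, so each row operation applies to both sides.
    m = [row[:] + [b[i]] for i, row in enumerate(a)]
    for col in range(n):
        # Swap in the row with the largest entry in this column.
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    # Back-substitution on the upper-triangular system.
    x = [0.0] * n
    for i in reversed(range(n)):
        known = sum(m[i][j] * x[j] for j in range(i + 1, n))
        x[i] = (m[i][n] - known) / m[i][i]
    return x


def fit_least_squares(rows, targets):
    """Ordinary least squares via the normal equations (X^T X) w = X^T y."""
    k = len(rows[0])
    xtx = [[sum(r[i] * r[j] for r in rows) for j in range(k)] for i in range(k)]
    xty = [sum(r[i] * y for r, y in zip(rows, targets)) for i in range(k)]
    return solve_linear_system(xtx, xty)


# Synthetic data (an assumption, not real pay data): salary depends on
# years of experience plus noise, and NOT on the group label.
random.seed(0)
rows, salaries = [], []
for i in range(200):
    years = i % 20          # 0..19 years of experience
    group = (i // 20) % 2   # hypothetical 0/1 demographic label
    salary = 20000 + 1500 * years + random.gauss(0, 300)
    rows.append([1.0, float(years), float(group)])  # leading 1.0 = intercept
    salaries.append(salary)

intercept, per_year, group_effect = fit_least_squares(rows, salaries)
print(f"baseline salary:       {intercept:10.2f}")
print(f"effect of each year:   {per_year:10.2f}")
print(f"effect of group label: {group_effect:10.2f}")
```

The weight the model gives to the group label should come out small next to the weight for a year of experience, because in this made-up data the label really has no influence. The caveat matters, though: had the salaries themselves been skewed by group, the model would have learned that skew just as faithfully.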

Predictive algorithms are also pretty good at identifying important exceptions to more general rules. In other words, they have an unerring tendency to undermine a lot of ‘received wisdom’. Given this, is it possible that in the decades to come, someone might actually prefer asking an algorithm, rather than, say, their boss, to judge how much of a pay rise they should be given? Or an algorithm, rather than their GP, to decide what medicines they should be prescribed? Or an algorithm, rather than an examiner, to decide what academic grade they should be given?