IBM SPSS Modeler has been through quite a few name changes since it first came onto the market as Clementine in the 1990s. In 1998 it was acquired by SPSS. Controversially, in my mind, SPSS then changed its name to SPSS Modeler (spelled the American way, which causes no end of confusion in spell checks or when writing documents). Even after nearly 5 years, many of its long-term fans and users still refer to it as Clementine, and indeed there are still pockets of people out there who don’t know that Clementine and Modeler are the same (rebranded) product. I mention this as it was a source of discussion recently in a meeting with an online retailer I am currently working with.

I have worked in the analytics space for almost 20 years, much of which has been spent working with clients selecting and implementing SPSS statistics and data mining products. Throughout the various renaming and ownership changes I have seen Modeler remain at the forefront of predictive analytics and data mining. Its longevity and resilience in the face of so much rebranding are, in my opinion, down to the following key factors:

1. It’s an open solution so works with your existing tech.

Modeler is specifically designed to fit with existing technology, infrastructure and legacy systems. Historically the software has taken a “best of breed” approach rather than insisting that an organisation adopt a proprietary data warehouse. As a result the ability to bring data together in multiple formats from many sources to be analysed together gives extreme flexibility. Often a company has spent large amounts of money on a data warehouse, or has a complex matrix of legacy systems. Some of Modeler’s competitors then insist on further investment in a proprietary datamart, requiring additional spend on database and ETL tools. With Modeler you can work with what you’ve already got. You may have already invested in business intelligence tools such as Cognos or BObj – Modeler works with them too and helps you move beyond the limits of business intelligence systems into the realms of real predictive analytics.

2. You can run most of the data mining in your own database.

One of Modeler’s greatest strengths is its ability to perform many data preparation and mining operations directly in the database. Because it generates SQL code that can be pushed back to the database for execution, many operations, such as sampling, sorting, deriving new fields, and certain types of graphing, can be performed there rather than on the local computer or server. When you are working with large datasets, these pushbacks can dramatically improve performance and processing speed, making your modelling quicker and more efficient.
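
To make the pushback idea concrete, here is a minimal sketch in Python using the standard `sqlite3` module. It is not the SQL that Modeler itself generates; the `orders` table, its columns and the threshold of 20 are all hypothetical. It simply contrasts pulling every row to the client with letting the database derive, filter and sort before anything is returned.

```python
import sqlite3

# Hypothetical in-memory table standing in for a large warehouse table.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE orders (customer_id INTEGER, amount REAL, items INTEGER)")
conn.executemany(
    "INSERT INTO orders VALUES (?, ?, ?)",
    [(1, 120.0, 4), (2, 15.0, 1), (3, 60.0, 3), (4, 200.0, 5)],
)

# Approach A: pull every row to the client, then derive a new field,
# filter on it and sort locally.
rows = conn.execute("SELECT customer_id, amount, items FROM orders").fetchall()
local = sorted(
    ((cid, amount / items) for cid, amount, items in rows if amount / items >= 20),
    key=lambda row: row[1],
    reverse=True,
)

# Approach B: push the same derive, filter and sort into the database as SQL,
# so only the finished result is returned to the client.
pushed_sql = """
    SELECT customer_id, amount / items AS avg_item_value
    FROM orders
    WHERE amount / items >= 20
    ORDER BY avg_item_value DESC
"""
pushed = conn.execute(pushed_sql).fetchall()

print(pushed)
```

Both approaches give the same answer on this toy table. The difference only shows up at scale, where approach B avoids moving millions of rows out of the database just to discard most of them.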

3. Simple deployment of your results to the front line.

Data mining produces a ‘model’ which predicts or scores certain types of behaviour or outcomes. The ability to deploy this intelligence to operational systems and share these results is key to the success of the project. If you can’t use the models, there is little point in producing them. Modeler is very good at deploying models and can export these results as executable files throughout your organisation, files which can be updated and modified quickly as the models change.

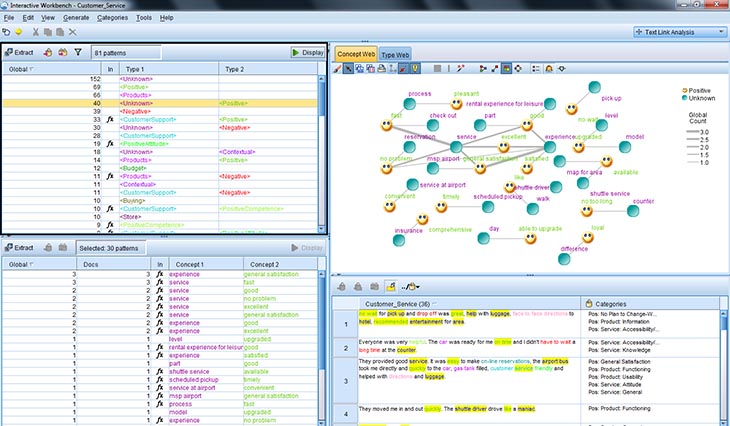

4. Modeler is intuitive, quick and easy to use.

Modeler’s visual interface is easy and quick to use. As a user you can easily investigate avenues or hunches that you have. Each model can be developed in seconds, so you can really play around with ‘throw away modelling’. If one model turns out to be irrelevant then try something else, just by pointing and clicking. Many other tools require specific programming or data queries to be made to allow this kind of ‘on the fly’ investigative work. The simplicity of Modeler’s interface means you’re more likely to try different angles, thereby getting more out of your data. It also means that it is easy to train users so the speed to solution is quick.

5. Produces models and rules which are easy to interpret.

In order to get buy-in from all parts of the organisation you need to produce rules or models which are easy to interpret and use. Modeler excels at this kind of interpretation, and even provides specific evaluation tools to determine the most effective models. You don’t need a PhD to run these techniques: the software can run all the algorithms available and come back with the top 5 most effective for you to evaluate. You can then see the results of each model side by side in order to determine which you want to use. This means that it’s quicker to get up to speed with the software, and you can rely on it to help you find the best model instead of working through all the possible techniques by hand, which is time-consuming.
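
The "run everything and show me the best few" idea can be sketched in a few lines of Python. This is only an illustration of the ranking principle, not how Modeler implements it: the candidate rules, the field layout (age, spend) and the holdout data are all made up, and accuracy is used as the only evaluation measure.

```python
from typing import Callable, Dict, List, Tuple

Record = Tuple[float, float]      # hypothetical input fields: (age, spend)
Model = Callable[[Record], int]   # returns 1 (responds) or 0 (does not respond)


def accuracy(model: Model, records: List[Record], labels: List[int]) -> float:
    """Fraction of holdout records the model classifies correctly."""
    hits = sum(1 for record, label in zip(records, labels) if model(record) == label)
    return hits / len(labels)


def rank_models(
    candidates: Dict[str, Model],
    records: List[Record],
    labels: List[int],
    top_n: int = 5,
) -> List[Tuple[str, float]]:
    """Score every candidate on the same holdout data and keep the best top_n."""
    scored = [(name, accuracy(model, records, labels)) for name, model in candidates.items()]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:top_n]


# Made-up holdout data and three deliberately simple candidate "models".
holdout = [(25, 300.0), (40, 50.0), (35, 500.0), (60, 20.0), (30, 250.0), (50, 400.0)]
actual = [1, 0, 1, 0, 1, 1]

candidates: Dict[str, Model] = {
    "spend_rule": lambda r: 1 if r[1] >= 200 else 0,
    "age_rule": lambda r: 1 if r[0] < 55 else 0,
    "always_yes": lambda r: 1,
}

ranking = rank_models(candidates, holdout, actual, top_n=2)
for name, score in ranking:
    print(f"{name}: {score:.2f}")
```

Because every candidate is scored on the same holdout records, the side-by-side comparison is like for like, which is what makes the shortlist something you can act on.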

Analysts tend to have their ‘favourite’ algorithm that they always choose first but sometimes this can lead to bias. The open approach of Modeler can help remove this bias. Artificial intelligence tools (neural networks) are typically described as ‘black box’, meaning that whilst they may give really accurate predictions, they don’t give any clue about what those predictions are based on. This can make neural network models hard to deploy and use. Modeler is really good at getting to the bottom of these sorts of problems and making traditionally hard to use and therefore mistrusted techniques accessible.

6. Storage of a permanent audit trail.

If you are working in an area such as fraud detection then auditability is vital. You need to know what was done with the data and why. Modeler’s interface and ‘streams’ allow an audit trail to be stored permanently, so analysts don’t need to worry about their accountability: everything is transparent.

7. Wide range and combination of models and algorithms.

Modeler contains lots of different algorithms. It’s often the case that one particular algorithm will give a better prediction or selection criteria than another, so it’s useful to have access to as many as possible. Being able to select, evaluate and combine different models and algorithms is vital to getting a useful answer. I haven’t come across another tool that combines techniques together in the way that Modeler can. Having many models ‘voting’ on the best outcome is a really robust way of getting accurate results.
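
As a rough illustration of models ‘voting’, here is a minimal majority-vote sketch in Python. It is a generic ensemble technique rather than Modeler’s own implementation, and the three sets of predictions (labelled tree, regression and neural net) are invented for the example.

```python
from collections import Counter
from typing import List, Sequence


def majority_vote(votes: Sequence[str]) -> str:
    """Return the most common prediction among the models for one record.

    On a tie, Counter.most_common keeps the value that was seen first.
    """
    return Counter(votes).most_common(1)[0][0]


def ensemble_predict(per_model_predictions: Sequence[Sequence[str]]) -> List[str]:
    """Combine predictions record by record.

    per_model_predictions[m][i] is model m's prediction for record i.
    """
    return [majority_vote(votes) for votes in zip(*per_model_predictions)]


# Invented predictions from three different models for the same four customers.
tree = ["churn", "stay", "stay", "churn"]
regression = ["churn", "churn", "stay", "stay"]
neural_net = ["stay", "churn", "stay", "churn"]

combined = ensemble_predict([tree, regression, neural_net])
print(combined)
```

No single model here agrees with the combined answer on every record, which is the point: where individual models make different mistakes, the vote tends to cancel them out.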

8. Working to a methodology or framework.

Modeler works to the CRISP-DM framework for data mining. It is important that both new and experienced data miners leverage work done by other organisations to solve similar business problems. With that in mind, Modeler is designed around the CRISP-DM framework to allow a shared and standard process model across the industry.

Perhaps the truth is it doesn’t matter what it’s called or how many copies there are out there. As long as people continue to derive value, business benefits and real cost savings from this software tool I will continue to call it both names to clarify with people in meetings, and I’ll put up with the American spelling too!