IBM SPSS Modeler Server has a number of configuration settings that control its behaviour. The default settings aim to ensure that stream execution completes successfully even if the host machine is shared with other applications; in other words, Modeler Server tries to be a “good citizen”. However, if Modeler Server is the primary application on the host, tuning these settings can significantly reduce the execution times of some streams.

One of these settings is cache_compression. It controls whether data that gets spilled to disk is compressed before being written to temporary cache files. Spilling to disk might happen, for example, if Modeler Server is sorting the data and can’t fit all the data within main memory (e.g. because the threshold indicated by memory_usage has been exceeded).

By default, cache_compression is enabled because this minimises the temporary disk space a stream needs in order to execute, which means more streams can execute in parallel and other applications can continue to work. If the host has a large amount of disk space that Modeler Server can use, then disabling cache_compression can allow some streams to execute 2-3 times faster. It may also reduce CPU usage significantly, which can be important in metered environments.

The actual speed-up depends on a number of factors:

- number and size of the temporary caches created during stream execution – if most of the execution can be done using main memory then changing cache_compression is unlikely to have any effect

- speed of the disks – if the disks are slow then savings made in the processing time by not compressing and decompressing the data may be overtaken by the overhead of writing and reading the larger caches

- how much data processing is done by the stream – this affects how much of the execution time is spent doing compression and decompression and therefore what proportion of the execution time is being saved

Another consideration is the disk space available for caching. If disk space is tight then disabling cache_compression may mean the stream is unable to run which is no use to anyone since a slow answer is better than no answer.
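One way to sanity-check the disk space before changing anything is a few lines of Python. This is only a sketch: the directory to check and the amount of space you need are assumptions you would have to supply yourself (the function name, the `"."` placeholder and the 10 GiB figure below are purely illustrative and do not come from Modeler).

```python
import shutil


def has_enough_temp_space(temp_dir: str, required_bytes: int) -> bool:
    """Return True if the filesystem holding temp_dir has at least required_bytes free."""
    free_bytes = shutil.disk_usage(temp_dir).free
    return free_bytes >= required_bytes


if __name__ == "__main__":
    # Placeholder values: point temp_dir at the directory your server uses for
    # temporary cache files and pick a size that suits your own streams.
    temp_dir = "."
    required_bytes = 10 * 1024**3  # 10 GiB, an arbitrary example figure

    if has_enough_temp_space(temp_dir, required_bytes):
        print("Enough free space to try uncompressed caches.")
    else:
        print("Disk space is tight; leaving cache compression enabled is safer.")
```

Uncompressed caches can be considerably larger than compressed ones, so it is worth being generous with the required figure.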

Assuming there is enough disk space, how do you change the setting?

One approach is to use the IBM SPSS Modeler Administration Console.

Alternatively, you could edit the options.cfg file in the /config folder directly in a text editor (be sure to take a copy of it first in case anything goes wrong). If you use the text editor approach, open the options.cfg file and find the line:

cache_compression, 1

and change it to:

cache_compression, 0

which will disable cache compression. Once you have updated and saved the options.cfg file, you may need to restart your Modeler Server service for the changes to take effect.
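If you have several servers to update, the same edit can be scripted. The following is a minimal sketch in Python rather than anything supplied with Modeler. It assumes the setting appears on its own line in the `cache_compression, 1` form shown above; the function names and the `.bak` backup suffix are my own choices for illustration.

```python
import re
import shutil
from pathlib import Path

# Matches a line such as "cache_compression, 1", keeping everything except the digit.
_SETTING = re.compile(r"^([ \t]*cache_compression[ \t]*,[ \t]*)\d+(.*)$", re.MULTILINE)


def set_cache_compression(config_text: str, enabled: bool) -> str:
    """Return config_text with the cache_compression value set to 1 or 0."""
    new_text, count = _SETTING.subn(
        lambda m: f"{m.group(1)}{int(enabled)}{m.group(2)}", config_text
    )
    if count == 0:
        raise ValueError("no cache_compression line found")
    return new_text


def update_options_file(path: Path, enabled: bool) -> Path:
    """Copy the file to <name>.bak, then rewrite it with the new setting."""
    backup = path.with_name(path.name + ".bak")
    shutil.copy2(path, backup)  # keep a copy in case anything goes wrong
    path.write_text(set_cache_compression(path.read_text(), enabled))
    return backup


# Example (run from the folder that contains options.cfg):
# update_options_file(Path("options.cfg"), enabled=False)
```

The text-rewriting step is kept separate from the file handling so it can be tried on a copy of the file contents first. A restart of the service may still be needed afterwards.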

Note that you can also change the options.cfg file on your Modeler client installation. However, this will only affect the cache_compression setting when you are running in local or desktop mode – remote Modeler Servers will need to be configured separately.

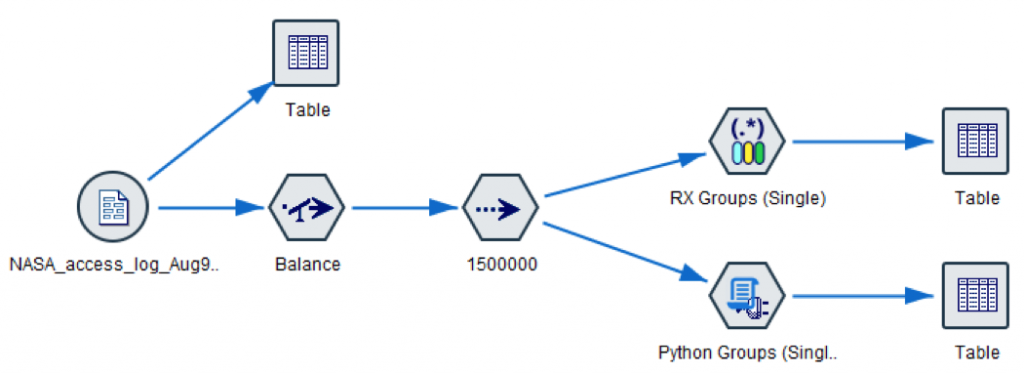

To test the possible effect, I ran a simple “micro-benchmark” stream. The customer_dbase.sav data set (132 fields, 5000 records) from the /Demos folder was duplicated using a balance node to 100 times the original number of rows and written to a new SAV file. This new file was then read, sorted and written to a table output. The stream was run 3 times for each compression setting, and the elapsed time and CPU times from the stream messages were noted.

Compression Enabled (cache_compression, 1):

| Elapsed (secs) | CPU (secs) |
|---|---|
| 22.99 | 34.48 |
| 20.26 | 34.40 |
| 21.44 | 33.98 |

Compression Disabled (cache_compression, 0):

| Elapsed (secs) | CPU (secs) |
|---|---|
| 9.88 | 8.70 |
| 8.16 | 9.06 |
| 8.20 | 8.80 |

(Note that the CPU time was greater than the elapsed time in some cases because multiple CPU cores were being used for some operations.)

This was run on my laptop, which has a relatively slow standard laptop drive (5400 RPM). Even so, the savings in both elapsed and CPU time were significant: with cache compression disabled, elapsed time was more than halved and CPU time fell to roughly a quarter. I also ran this in a virtualised environment (different CPU and disk configuration) and saw similar savings.
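For anyone who wants to check the arithmetic, the averages and ratios can be worked out from the six runs in the tables above. The numbers are copied straight from those tables; the variable and function names are just for illustration.

```python
from statistics import mean

# Timings in seconds, copied from the two tables above (three runs each).
enabled = {"elapsed": [22.99, 20.26, 21.44], "cpu": [34.48, 34.40, 33.98]}
disabled = {"elapsed": [9.88, 8.16, 8.20], "cpu": [8.70, 9.06, 8.80]}


def speedup(before: list[float], after: list[float]) -> float:
    """Ratio of the mean time before the change to the mean time after it."""
    return mean(before) / mean(after)


elapsed_speedup = speedup(enabled["elapsed"], disabled["elapsed"])
cpu_speedup = speedup(enabled["cpu"], disabled["cpu"])

print(f"Mean elapsed: {mean(enabled['elapsed']):.2f}s -> {mean(disabled['elapsed']):.2f}s "
      f"({elapsed_speedup:.2f}x faster)")
print(f"Mean CPU:     {mean(enabled['cpu']):.2f}s -> {mean(disabled['cpu']):.2f}s "
      f"({cpu_speedup:.2f}x less)")
```

On these figures the elapsed time improves by a factor of about 2.5 and the CPU time by a factor of about 3.9. With only three runs per setting, treat these as indicative rather than precise.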

However, the micro-benchmark stream almost certainly represents a best-case scenario. This is primarily because the processing done by the stream is minimal (sorting is a relatively simple operation), which means that cache file compression and decompression is the dominant factor in the stream execution. If a stream is doing more data processing and/or model building, the savings are likely to be reduced. It may also be impossible to run some streams because the uncompressed caches end up consuming all the disk space.

Nonetheless, it is worth exploring whether disabling cache compression improves overall performance in your environment.

This blog was first published on IBM’s developer site.